Online shoppers are often influenced by the customer reviews they read when researching products and services. A 2011 Harvard Business School study found that restaurants that raised their Yelp rating by one star increased their revenues by 5 to 9 percent. Reviews are especially useful for tourist destinations and other “experience” products that you cannot really judge without trying them. But with studies suggesting that 30% of online product reviews and 10-20% of hotel and restaurant reviews are fake, how do you know which reviews to believe?

Customers are in danger of being misled by millions of “fake” reviews orchestrated by companies to trick potential customers, and even experts have a hard time identifying deceptive reviews. Websites such as Yelp and TripAdvisor are search engines for a specific category that rely heavily on ratings and reviews. For example, TripAdvisor hosts hundreds of millions of reviews written by and for vacationers. The site is free to use, with revenue coming from advertising, paid-for links, and payments or commissions from the companies. Some businesses try to “buy their way in” to the search results, not by buying advertising slots but by faking online reviews. With traditional advertising, you can tell that you are looking at a paid advertisement. With TripAdvisor, you assume you are reading authentic consumer opinions, which makes this practice even more deceptive.

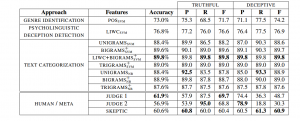

Researchers, including a team at Cornell University, have developed sophisticated automated methods to detect fake reviews. Their work uses three different approaches: part-of-speech (POS) tags, Linguistic Inquiry and Word Count (LIWC), and text categorization. The accompanying image shows an example of how a fake review is identified using strong deceptive indicators obtained from these approaches. Features from the three approaches are used to train Naive Bayes and Support Vector Machine classifiers. Integrating work from psychology and computational linguistics, the researchers develop and compare the three approaches to detecting deceptive opinion spam, and ultimately develop a classifier that is nearly 90% accurate. While previous research has focused primarily on manually identifiable instances of opinion spam, the latest solutions can identify fictitious opinions that have been deliberately written to sound authentic. Although the solution is quite robust, we believe there are possible areas of future work.
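To make the text categorization idea concrete, here is a minimal sketch of a Naive Bayes classifier over word unigrams, written with only the Python standard library. It is not the Cornell team's implementation: the class name, the tokenizer, and the four training reviews are invented for illustration, and the POS and LIWC features described above are left out entirely.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word unigrams."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes over unigram counts with add-one smoothing."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.labels}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        # The vocabulary is every word seen in any training review.
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def log_scores(self, text):
        """Return the unnormalized log-probability of each label."""
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in self.labels:
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                if word in self.vocab:  # words never seen in training are skipped
                    score += math.log((counts[word] + 1) / denom)
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Toy, invented training data (an assumption for illustration, not a real corpus).
train_texts = [
    "My husband and I had an amazing luxury experience, I will definitely return",
    "I loved my stay, my family and I felt the vacation was perfect",
    "The room was small and the bathroom floor was cold, location near the station",
    "Check in at the front desk took ten minutes, the bed was firm, price was fair",
]
train_labels = ["deceptive", "deceptive", "truthful", "truthful"]

model = NaiveBayesTextClassifier().fit(train_texts, train_labels)
print(model.predict("The bathroom was small but the location was near the station"))
print(model.predict("My husband and I loved the amazing luxury vacation"))
```

With data this small the result only shows the mechanics: the classifier leans toward whichever class used a review's words more often during training. A real system would need a large labeled corpus and held-out evaluation.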

The best performing algorithm that the Cornell research team developed was 89.8% accurate (calculated based on the aggregate true positive, false positive and false negative rates). This is in contrast to 61.9% accuracy from the best performing human judge. These results suggest that TripAdvisor could realize substantial improvements by using this algorithm to weed out false reviews. However, it is important to note that the effectiveness shown in this study may not be replicated in an actual commercial setting. As soon as the algorithm is put into production, it is likely that spammers will start to reverse-engineer its rules, creating ever more realistic fake reviews. Additional study would be needed to determine whether the algorithms can keep up.
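For readers unfamiliar with how such figures are derived, the sketch below computes accuracy, precision, recall and F1 from the four cells of a confusion matrix. Note that accuracy also needs the true negative count. The function name and the counts are hypothetical, chosen only so that accuracy lands near 90%; they are not the study's actual results.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute standard metrics, treating 'fake' as the positive class.

    tp: fake reviews flagged as fake      fp: genuine reviews flagged as fake
    fn: fake reviews that slipped through tn: genuine reviews left alone
    """
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# Hypothetical counts for 800 reviews (invented for illustration).
hypothetical = classification_metrics(tp=360, fp=42, fn=40, tn=358)
for name, value in hypothetical.items():
    print(f"{name}: {value:.3f}")
```

Accuracy alone can hide an imbalance between the two error types, which is why precision and recall are reported alongside it.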

TripAdvisor advertises “Zero Tolerance for Fake Reviews.” They currently use a team of moderators who examine reviews; this team is aided by automatic algorithms. Though they do not publicly discuss the algorithms used, they state that their team dedicates “thousands of hours a year” to moderation. To the extent that improved algorithmic tools are both more accurate and less costly than human moderation, the commercial upside for TripAdvisor is substantial.

While the review text is a good starting point, other metadata could help flag fake reviews, for example how many reviews the user has written and the authenticity of those other reviews. Filtering like this faces the problem of fakers adjusting their writing based on what gets screened in and out. If certain words appear more genuine, fakers may adopt those words, so companies that screen reviews need to keep their identification methods secret. Making the model adaptive to the changing behavior of fakers is more challenging than building this static model, as the model needs to identify what fraudsters will do vs. what they do today.

Sites like TripAdvisor may not have clear incentives to remove fake reviews, as even fake reviews provide more content to show to visitors. Misclassifying genuine reviews as fake would also lead to complaints from users and businesses. Users and businesses may expect reviews to be truthful, and may stop using a site if they rely on too many reviews that turn out to be fake, but it remains unclear how strict a threshold companies should apply when screening.
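The threshold question can be illustrated with a small sketch. Assume a classifier assigns each review a score between 0 and 1, where higher means more likely fake, and the site removes every review at or above a chosen threshold. The scores, labels, and function name below are invented for illustration.

```python
def screening_outcomes(scored_reviews, threshold):
    """Count what happens when reviews scoring >= threshold are removed.

    scored_reviews: list of (fake_score, is_actually_fake) pairs.
    """
    removed_fake = sum(1 for s, fake in scored_reviews if s >= threshold and fake)
    removed_genuine = sum(1 for s, fake in scored_reviews if s >= threshold and not fake)
    missed_fake = sum(1 for s, fake in scored_reviews if s < threshold and fake)
    return {
        "removed_fake": removed_fake,
        "removed_genuine": removed_genuine,
        "missed_fake": missed_fake,
    }


# Hypothetical classifier scores paired with the (normally unknown) truth.
reviews = [
    (0.95, True), (0.85, True), (0.70, False), (0.65, True),
    (0.40, False), (0.30, True), (0.20, False), (0.10, False),
]

for threshold in (0.9, 0.6, 0.25):
    print(threshold, screening_outcomes(reviews, threshold))
```

In this toy data, a strict cutoff of 0.9 removes no genuine reviews but misses three of the four fakes, while a lenient cutoff of 0.25 catches every fake at the cost of removing two genuine reviews. Where to set the cutoff depends on how a site weighs complaints about wrongly removed reviews against the loss of trust from fakes that remain.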

By: Team Codebusters

Sources:

http://www.hbs.edu/faculty/Publication%20Files/12-016_a7e4a5a2-03f9-490d-b093-8f951238dba2.pdf

http://www.nytimes.com/2011/08/20/technology/finding-fake-reviews-online.html

http://aclweb.org/anthology/P/P11/P11-1032.pdf

https://www.tripadvisor.com/vpages/review_mod_fraud_detect.html

http://www.eater.com/2013/9/26/6364287/16-of-yelp-restaurant-reviews-are-fake-study-says
