A Judicial COMPAS

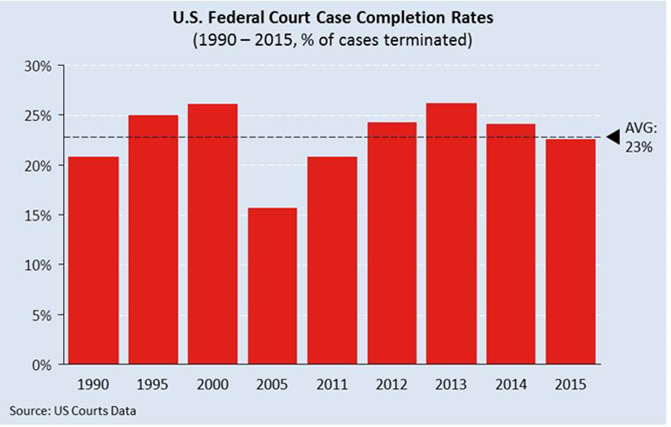

About 52,000 legal cases were opened at the federal level in 2015, of which roughly 12,000 were completed. This case completion rate of 22% has remained virtually unchanged since 1990, which suggests that the US court system is severely under-resourced and overburdened. That backlog helps explain the rise of COMPAS. Today, judges considering whether a defendant should be allowed bail have the option of turning to COMPAS, which uses machine learning to predict criminal recidivism. Before software like this was available, bail decisions were made largely at the discretion of a judge, who used his or her previous experience to estimate the likelihood of recidivism. Critics of that discretionary system point out that the judiciary had systematically biased bail decisions against minorities, amplifying the need for quick, efficient, and objective analysis.

Statistics In Legal Decision Making

Northpointe, the company that sells COMPAS, claims that its software removes the bias inherent in human decision making. Like any good data-based company, it produced a detailed report outlining the key variables in its risk model and the experiments validating the results. Although the marketing material leans on buzzwords like machine learning, there’s nothing new about using statistical analysis to aid in legal decisions. As the authors of a 2015 report on the Courts and Predictive Algorithms wrote: “[over] the past thirty years, statistical methods originally designed for use in probation and parole decisions have become more advanced and more widely adopted, not only for probation and bail decisions, but also for sentencing itself.”

Finding True North

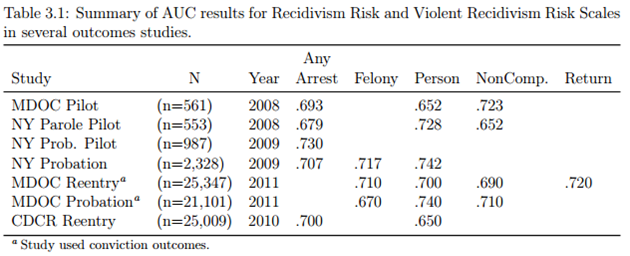

Northpointe uses the area under the ROC curve (AUC) as a simple measure of predictive accuracy for COMPAS. An AUC of 0.5 corresponds to chance and 1.0 to perfect ranking; for reference, values of roughly 0.7 and above indicate moderate to strong predictive accuracy. The table below was taken from a 2012 report by Northpointe and shows that COMPAS produces relatively accurate predictions of recidivism.
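To make the AUC figure more concrete, here is a minimal sketch of how it can be computed: AUC equals the probability that a randomly chosen person who reoffended received a higher risk score than a randomly chosen person who did not. The scores and outcomes below are made up for illustration and are not COMPAS data, and the function name `auc` is my own.

```python
def auc(scores, labels):
    """Share of (reoffender, non-reoffender) pairs in which the reoffender
    has the higher score. Ties count as half a win."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical risk scores (1-10) and outcomes (1 = reoffended).
scores = [9, 8, 7, 6, 5, 4, 3, 2]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

example_auc = auc(scores, labels)
print(f"AUC = {example_auc:.4f}")
```

Note that AUC only measures how well the scores rank people overall. It says nothing about whether the errors fall evenly across groups.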

Yet looking at the accuracy numbers alone ignores a critical part of the original need for an algorithm: to introduce an objective method for evaluating bail decisions. ProPublica, an independent nonprofit that performs investigative journalism for the public good, investigated whether there were any racial biases in COMPAS. What the organization found is “that black defendants…were nearly twice as likely to be misclassified as higher risk compared to their white counterparts (45 percent vs. 23 percent).” Clearly there’s more work to be done to strike the right balance between efficiency and effectiveness.
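The misclassification rates quoted above are false positive rates: among defendants who did not go on to reoffend, the share that was nonetheless labeled higher risk. The sketch below shows that calculation per group. The records are synthetic, constructed only so that the output mirrors the 45 percent and 23 percent figures; the group labels and the function name are placeholders of mine, not ProPublica's code.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, predicted_high_risk, reoffended).
    Returns, per group, the share of non-reoffenders flagged as high risk."""
    flagged = defaultdict(int)
    non_reoffenders = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if reoffended:
            continue  # only people who did NOT reoffend can be false positives
        non_reoffenders[group] += 1
        if predicted_high_risk:
            flagged[group] += 1
    return {g: flagged[g] / n for g, n in non_reoffenders.items()}


# Synthetic records, not real defendant data.
records = (
    [("A", True, False)] * 9 + [("A", False, False)] * 11    # 9 of 20 flagged
    + [("B", True, False)] * 23 + [("B", False, False)] * 77  # 23 of 100 flagged
    + [("A", True, True)] * 5 + [("B", True, True)] * 5       # reoffenders, ignored
)

fpr = false_positive_rate_by_group(records)
for group, rate in sorted(fpr.items()):
    print(f"group {group}: false positive rate = {rate:.2f}")
```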

Making Changes to COMPAS

Relying on algorithms alone can make decision makers feel safe in the certainty of numbers. However, a combination of algorithms could help alleviate the inherent biases found in COMPAS’ reliance on a single algorithm to classify defendants by their risk of recidivism. An ensemble approach that combines multiple machine learning techniques (e.g. LASSO or random forest) could not only help address the racial bias pointed out above but could also help address other factors that might bias decisions, such as socioeconomic status or geography. In addition, if the courts are going to rely on algorithms to make decisions on bail, the algorithms should be transparent to the defendant. This not only allows people to fully understand how decisions are being made but also allows people to suggest improvements. This latter point is particularly important because the judiciary should have a vested interest in a fair system that is free from gaming, which could occur in the absence of transparency.
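As a rough illustration of the ensemble idea, the sketch below averages the risk estimates of several models and applies a single threshold. Everything in it is hypothetical: the three toy functions stand in for fitted models such as a LASSO regression or a random forest, and the feature names and the 0.5 threshold are assumptions made for the example. Whether such an ensemble actually reduces bias would still have to be checked group by group.

```python
def ensemble_risk(features, models, threshold=0.5):
    """Average the probability estimates of several models (soft voting)
    and flag the defendant as high risk if the mean reaches the threshold."""
    probabilities = [model(features) for model in models]
    mean_probability = sum(probabilities) / len(probabilities)
    return mean_probability, mean_probability >= threshold


# Hypothetical stand-ins for fitted models; each returns a probability in [0, 1].
def model_a(features):
    return min(1.0, 0.1 * features["prior_arrests"])


def model_b(features):
    return 0.8 if features["age"] < 25 else 0.3


def model_c(features):
    return 0.5


defendant = {"prior_arrests": 2, "age": 30}  # made-up feature values
probability, high_risk = ensemble_risk(defendant, [model_a, model_b, model_c])
print(f"mean risk = {probability:.3f}, high risk = {high_risk}")
```

Publishing the component models and the averaging rule in this form is also one way to give defendants the transparency argued for above.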

Sources:

- https://www.nytimes.com/aponline/2017/04/29/us/ap-us-bail-reform-texas.html

- https://www.nytimes.com/2017/05/01/us/politics/sent-to-prison-by-a-software-programs-secret-algorithms.html

- http://www.law.nyu.edu/sites/default/files/upload_documents/Angele%20Christin.pdf

- http://www.northpointeinc.com/files/technical_documents/FieldGuide2_081412.pdf

- http://www.uscourts.gov/sites/default/files/data_tables/Table2.02.pdf

- https://www.propublica.org/article/how-we-analyzed-the-compas-recidivism-algorithm
