The NextGen Solution to Your Perfect Photo Needs

The Problem / Opportunity

The US photography market generates $10 billion in annual revenue. A number of startups and large technology companies have aimed to improve both professionals’ and amateurs’ photographs (especially those taken for social media), principally by focusing on post-production services, and some have achieved valuations in the hundreds of millions of dollars. To our knowledge, no technology company has focused on the pre-production stage of photography, leaving significant open space for our company.

According to National Geographic’s recent 50 Greatest Pictures issue, “a photographer shoots 20,000 to 60,000 images on assignment. Of those, perhaps a dozen will see the published light of day.” Photography is an art that depends on a number of factors (timing, weather, sun exposure, angle, and more), all of which contribute to the unfortunately ephemeral nature of the perfect snapshot. This creates an opportunity for a tool that reduces the time, energy, and planning needed to capture the optimal image.

Solution & Data Strategy

Focus Pocus tracks, identifies, and predicts the best locations for a photo, showing casual and professional photographers where to go to capture their ideal shot. The solution will be available as a downloadable app on the user’s phone. In future iterations, Focus Pocus may be installed natively on Wi-Fi-enabled cameras.

Focus Pocus solves the problem of finding and taking the ideal shot by crowdsourcing the best possible photograph locations and conditions, relying on large amounts of publicly available data combined with sensor data (from users’ cameras/input) and guiding the user through the photo setup process.

First, Focus Pocus will integrate with photo-sharing platforms like Instagram, Flickr, Google Photos, and 500px to identify publicly available photographs that are either (1) highly popular or (2) of high quality for the area where you are located. Popularity on social media can be measured by how frequently each photo is clicked, shared, or liked. High-quality photos can be identified using deep learning photo-scoring algorithms that rate photographs on characteristics such as clarity, uniqueness, and color.
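As a minimal sketch of the popularity measure described above, the Python below combines click, share, and like counts into a single score and ranks photos by it. The `PhotoStats` record, the weights, and the log scaling are illustrative assumptions rather than a settled design; real counts would have to come from each platform's own API.

```python
import math
from dataclasses import dataclass


@dataclass
class PhotoStats:
    """Engagement counts for one public photo (hypothetical record)."""
    photo_id: str
    clicks: int
    shares: int
    likes: int


# Assumed weights: a share is treated as a stronger signal than a like or click.
WEIGHTS = {"clicks": 1.0, "shares": 3.0, "likes": 2.0}


def popularity_score(p: PhotoStats) -> float:
    """Weighted sum of log-scaled counts, so one viral photo does not dominate."""
    return (
        WEIGHTS["clicks"] * math.log1p(p.clicks)
        + WEIGHTS["shares"] * math.log1p(p.shares)
        + WEIGHTS["likes"] * math.log1p(p.likes)
    )


def top_photos(photos, k=3):
    """Return the k photos with the highest popularity score."""
    return sorted(photos, key=popularity_score, reverse=True)[:k]
```

The log scaling is one possible choice; a production system would likely also normalize by the photographer's follower count or by the photo's age.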

Next, Focus Pocus will identify which ideal shots are available to you and what settings or angles you need to use in order to achieve them, based on your camera type, time of day, lighting conditions, and other user-specific data.

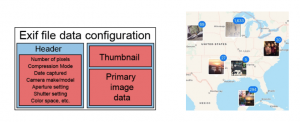

Most of this data is already available: photographs taken with non-phone cameras typically carry a large amount of technical metadata in the Exchangeable Image File Format (EXIF), including the following:

- Date and time taken

- Image name, size, and resolution

- Camera name, aperture, exposure time, focal length, and ISO

- Location data (latitude/longitude), from which weather conditions and a map position can be looked up
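The fields listed above could be collected into one record per photo. The sketch below assumes the EXIF tags have already been decoded into a plain dictionary by some EXIF reader; the `ShotRecord` type and the `parse_exif` helper are hypothetical names. EXIF stores GPS coordinates as degrees, minutes, and seconds plus a hemisphere reference, so they are converted to signed decimal degrees here.

```python
from dataclasses import dataclass


@dataclass
class ShotRecord:
    """Per-photo settings and location (hypothetical structure)."""
    taken_at: str
    camera: str
    aperture: float
    exposure_s: float
    focal_length_mm: float
    iso: int
    lat: float
    lon: float


def dms_to_decimal(dms, ref):
    """Convert (degrees, minutes, seconds) and N/S/E/W into signed decimal degrees."""
    degrees, minutes, seconds = dms
    value = degrees + minutes / 60 + seconds / 3600
    return -value if ref in ("S", "W") else value


def parse_exif(tags: dict) -> ShotRecord:
    """Build a ShotRecord from a dict keyed by standard EXIF tag names.

    Assumes values were already decoded to plain numbers and strings.
    """
    return ShotRecord(
        taken_at=tags["DateTimeOriginal"],
        camera=tags["Model"],
        aperture=float(tags["FNumber"]),
        exposure_s=float(tags["ExposureTime"]),
        focal_length_mm=float(tags["FocalLength"]),
        iso=int(tags["ISOSpeedRatings"]),
        lat=dms_to_decimal(tags["GPSLatitude"], tags["GPSLatitudeRef"]),
        lon=dms_to_decimal(tags["GPSLongitude"], tags["GPSLongitudeRef"]),
    )


# Illustrative values only: made-up settings at a point in downtown Chicago.
example_tags = {
    "DateTimeOriginal": "2018:04:14 18:32:10",
    "Model": "ExampleCam X100",
    "FNumber": 8.0,
    "ExposureTime": 1 / 250,
    "FocalLength": 35.0,
    "ISOSpeedRatings": 100,
    "GPSLatitude": (41, 52, 57.6),
    "GPSLatitudeRef": "N",
    "GPSLongitude": (87, 37, 24.0),
    "GPSLongitudeRef": "W",
}
record = parse_exif(example_tags)
```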

Over time, after initial training on the photo datasets provided, Focus Pocus can go on to map out the entire city. The goal is to handle everything from recommending photo spots to tourists, to configuring the camera internally with the right settings, to using AR to position the camera at the right height and distance from the subject. Sunlight and weather tracking can also be developed, using training data together with the current time and conditions to identify the best locations (by using integrations like LinkedIn and Rapportive).

Pilot & Prototype

The project lends itself to piloting at trivial cost in a single city before scaling to other cities. As a pilot, we would set up sensors (light and weather sensors, cameras, etc.) at a handful of heavily trafficked photo locations in Chicago, chosen by assessing geospatial photo density using a service like TwiMap or InstMap. Early candidates would be the Bean, Navy Pier, Millennium Park, and Willis Tower. Focusing on these locations initially would also make it easier to market the product through concentrated advertising or on-site demonstrations by founding employees.
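To illustrate what assessing geospatial photo density could look like, the sketch below bins photo coordinates into a fixed grid and returns the busiest cells. It does not use TwiMap or InstMap; the function names, the 0.001-degree cell size (about 111 m north to south), and the sample points are assumptions for illustration.

```python
import math
from collections import Counter


def cell_for(lat, lon, cell_deg=0.001):
    """Map a coordinate to the integer index of the grid cell that contains it."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def densest_cells(points, cell_deg=0.001, k=4):
    """Count photos per grid cell and return the k busiest cells with their counts."""
    counts = Counter(cell_for(lat, lon, cell_deg) for lat, lon in points)
    return counts.most_common(k)


# Made-up (lat, lon) pairs standing in for geotagged public photos.
sample_points = [
    (41.8827, -87.6233),
    (41.8826, -87.6234),
    (41.8916, -87.6079),
]
hotspots = densest_cells(sample_points, k=2)
```

A fixed grid is the simplest option; a clustering method such as DBSCAN would handle hotspots of irregular shape but adds a dependency.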

From there, we would develop a simple mobile photography app that recommends ideal photo locations and applies ideal camera settings for each location, based not only on the phone’s sensors but also on our more refined on-site sensors.

Validation

To ensure that our solution meets its objective of identifying the best locations and conditions for photographs, we could evaluate the predictive power of the sensors by benchmarking photographs taken with Focus Pocus against those taken without it. We could also measure the following success metrics:

- Number of Downloads/Installations, Retention Rates, Usage Per Member
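The benchmark of photographs taken with and without Focus Pocus could be run along the following lines. This is a sketch under stated assumptions: each photo already has a numeric quality score from some photo-scoring model, the scores below are invented, and a one-sided permutation test is only one of several reasonable ways to check whether the difference is larger than chance.

```python
import random
from statistics import mean


def mean_lift(with_app, without_app):
    """Difference in average quality score between the two groups."""
    return mean(with_app) - mean(without_app)


def permutation_p_value(with_app, without_app, n_iter=2000, seed=0):
    """One-sided permutation test: how often does a random split of the pooled
    scores produce a lift at least as large as the observed one?"""
    rng = random.Random(seed)
    observed = mean_lift(with_app, without_app)
    pooled = list(with_app) + list(without_app)
    n_with = len(with_app)
    hits = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        if mean_lift(pooled[:n_with], pooled[n_with:]) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_iter + 1)


# Invented scores on a 0-10 scale, for illustration only.
scores_with = [7.1, 7.8, 8.0, 7.5, 8.2, 7.9]
scores_without = [6.0, 6.4, 5.9, 6.8, 6.1, 6.3]
lift = mean_lift(scores_with, scores_without)
p_value = permutation_p_value(scores_with, scores_without)
```

A small p-value here would suggest that the score difference is unlikely to be due to chance alone, though a real study would also need to control for photographer skill and equipment.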

In validating market need, we also researched applications similar to Focus Pocus and found that businesses such as Yelp and Flickr have already used deep learning to build photo-scoring models. For instance, by assessing factors such as depth of field, focus, and alignment, Yelp is able to select the best photos for its partner restaurants. However, this use case is after the fact (i.e., after the photo has already been taken), whereas the solution we propose allows action to be taken to optimize photo quality before the photo is taken.

Sources

https://engineeringblog.yelp.com/2016/11/finding-beautiful-yelp-photos-using-deep-learning.html

https://digital-photography-school.com/1000-shots-a-day-the-national-geographic-photographer/

Jin, Xin, et al. “Deep image aesthetics classification using inception modules and fine-tuning connected layer.” In 2016 8th International Conference on Wireless Communications & Signal Processing (WCSP). IEEE, 2016.

Aiello, Luca Maria, Rossano Schifanella, Miriam Redi, Stacey Svetlichnaya, Frank Liu, and Simon Osindero. “Beautiful and damned. Combined effect of content quality and social ties on user engagement.” IEEE Transactions on Knowledge and Data Engineering 29, no. 12 (2017): 2682-2695.

Datta, Ritendra, and James Z. Wang. “ACQUINE: aesthetic quality inference engine-real-time automatic rating of photo aesthetics.” In Proceedings of the international conference on Multimedia information retrieval, pp. 421-424. ACM, 2010.

https://www.crunchbase.com/organization/magisto

https://www.crunchbase.com/organization/animoto

https://hackernoon.com/hacking-a-25-iot-camera-to-do-more-than-its-worth-41a8d4dc805c

Team Members

Siddhant Dube

Eileen Feng

Nathan Stornetta

Tiffany Ho

Christina Xiong